Spotting Trouble in the Data Science Market Place — The Good and The Bad Patterns

A black box is only mysterious if you call it that way.

A bad predictive model can do more than just lack accuracy, it will drain wallets and hurt people. So, here are some warning flags to watch out for, whether you’re a buyer or seller, when navigating the unnecessarily mysterious and opaque data science marketplace.

Transparency

Transparency and reproducibility have done wonders for science, it can do wonders for sales too. If you cannot reveal your secret sauce, find other ways for prospective customers to measure your claims. We’re talking data science here, so customer testimonials won’t cut it. For example, offer the prospective customer an access point to upload data and return a quantitative analysis report sample.

Green Flag

If you’re giving your customers all the tools, the code, and the know-how to reproduce whatever you are selling, you’re top-shelf — you get the big, fat green flag, nothing left to discuss.

If you feel you cannot do so, you need to clearly demonstrate why and early on. A black box is only mysterious if you call it that way.

You could be in the exclusivity business where your IP is your value proposition, like that of a competitive trading algorithm. If too many get their hands on it, it could risk diluting or even killing its predictive abilities. Keeping it secret is justified.

You could be running a software-as-a-service intelligence business (SaaS) where the whole point is the convenience of quick answers. Most of us don’t want to be slowed down by “hows” and “whys” when getting recommendations for a book or a movie, we just want the “whats”.

Whatever the case may be, be transparent and upfront about it.

Red Flag

Lack of justifiable transparency for any product is a sign of snake oil. In my healthcare days, I was often called in to sniff out bullshit whenever a vendor would come knocking. The one case that jumps to mind was a group selling a medical readmission model and refusing to let us test it. I was a bit inexperienced back then and failed to immediately hit the “DEFCON 1” button and have them thrown out.

We eventually offered to give them an out-of-sample data set of anonymized patients so they could run it through their models and return a simple list of probabilities of readmission for each patient. The excuses kept flowing in. Big, big red flag and a big waste of time. Obviously, they didn’t get our business. It is just sad to think that others may fall prey to such charlatanry.

Team Work

This has been the biggest secret of my success as both a builder, buyer and seller of data science — and it’s made me many, long-term friends to boot.

Green Flag

Vendors that adopted me and made me part of the team, generous with their knowledge, patiently answering my questions (even the annoying ones where I’d change the question just enough to give me a second chance of not feeling like an idiot), were always invited back to the table for more projects.

And on the sell side, I return the favor. I always bring in whoever has the desire or interest to become that “colleague in residency”. This has saved my butt on so many occasions.

They become allies straddling both worlds. They’ll give you a heads-up when veering off-road, and nudge you when additional details or an extra layer of transparency can smooth a bumpy ride. More importantly, they’ll legitimize your efforts and almost guarantee that your work gets implemented.

Red Flag

This is not an exclusive data science problem, but being referred to as “the vendor” or “the contractor” during meetings doesn’ t imbue any sense of long-term partnership or respect.

The same goes for introductory meetings where those sitting on the other side try to pry as many proprietary nuggets as possible — you can practically see the cogs in their heads working to reverse-engineer every word out of your mouth. Or the “quickie” client trying to pay you in increments of an hour and divest you of as much source code as possible in a short afternoon… I know we’re not cheap, but c’mon people, that’s why they created the Stats Stack Exchange.

“Real” Production Experience

Things move fast in this domain. Asking somebody how many times they released a model in production based on source code from a week-old GitHub repo is disingenuous. That said, Tensorflow, word2vec, Keras are cutting edge but have been around long enough to have been implemented in production plenty of times.

Green Flags

Being able to show a track record of “real” models released into production for “real” customers, whether reports, CRM integrations, smart workflow add-ons, is a huge green flag.

It doesn’t matter if they haven’t solved the problem you are facing, or even if they’ve never worked in your domain, “real” production modeling experience trumps anything else. Remember that big data is big data, IoT is IoT, distributed modeling is distributed modeling, and they’re all domain agnostic.

Those teams are rare and light-years ahead of the pack.

Red Flags

“We don’t have a customer yet but you should see the scores we got while backtesting it!”. No, not really, a standalone model running out of a Jupyter notebook yielding a comma-delimited file has practically nothing in common with a distributed modeling system injecting real-time results into a command center, regardless of backtests. Reminds me of those ads for currency trading bots promising to make you rich in your sleep. It’s either making somebody very rich at Renaissance Technologies or it’s being sold to suckers, you — not much ground in-between.

Unfortunately, most modeling shops out there have little experience with production pipelines. Those that have a lot of experience building data pipelines at scale have little modeling know-how. Both scenarios are red flags.

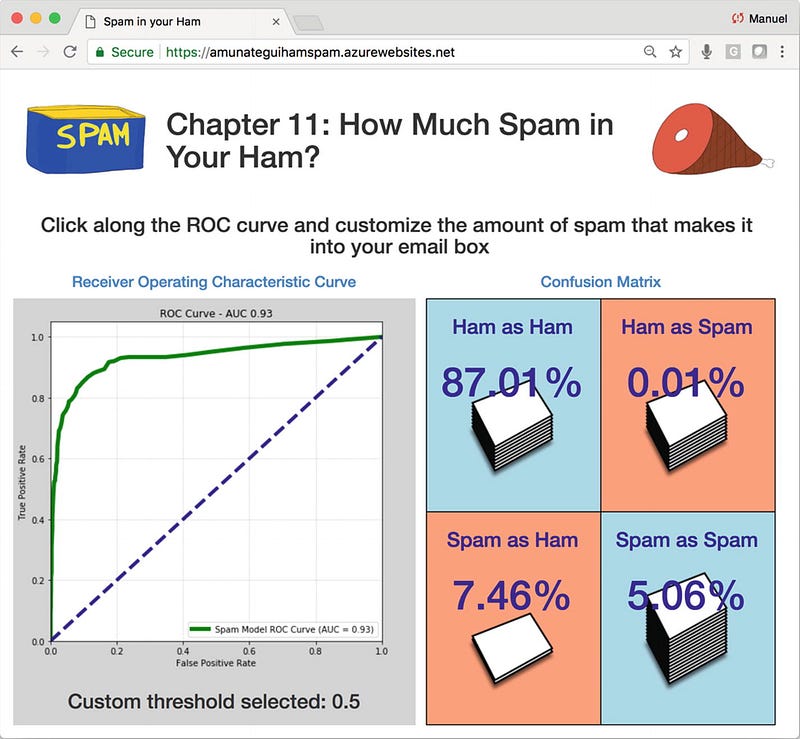

Confusion Matrix

Clients that understand this simple tool, love it! Those that don’t understand it, well, make that your first order of business. It’s a communication gem, a magical nerd-to-C-suite translating device. It may say “confusion” in it, but it’s only confusing to those that can’t read it.

It not only shows how many predictions your model nailed, but it also, and almost more importantly, how many false ones were labeled as true and true ones, labeled as false. It’s with a confusion matrix that you can understand the operationalization costs of any model (check out my video introducing this great tool: https://www.youtube.com/watch?v=BAe0Jd12Vpc ).

Green Flag

Those that can read this matrix and that teach prospective customers how to interpret it, get the green flag award of the year!

Let’s take the confusion matrix for a sepsis risk healthcare model as an example. It will tell you how many patients to see before finding an actual sepsis infection. If it can find one actual case out of four false positives, this hospital will have to budget enough medical pipeline to test 5 patients for every sepsis infection they want to catch. From there they can count how many medical practitioners they’ll need, how much medical facility to rent, the labs, liability, parking, and so on and so forth until they get down to raw dollars… That’s the clincher, we all speak dollars and sense —and that’s what the confusion matrix tells you.

Red Flag

Long story short, anybody that can’t read it, or lies about it. I remember a customer, supposedly well versed in data science, completely failing to appreciate that few models, with the exception of modeling the Iris data set, yield zero false positives. If such an animal existed, it would be a unicorn, and you wouldn’t be buying or selling it, you’d be worrying whether the hull of your yacht will be big enough from all your stock-market winnings.

But if you really, really, insist on a zero false-positive model, then I have one right now — its miraculously ready to go and has absolutely, 100% guaranteed zero-false positives, and I’ll even throw in a beautiful bridge from Brooklyn for free!

Anyhoo…

I’ve built and sold too many of these in the last decade to not recognize the good and bad patterns from miles away. Transparency and honesty will always be your best defense. If you sense trouble with your model, the data, or the people, mention it and do so early on. The longer you sit on it, the more stressful it will become and the more humble pie you’ll have to eat.

When buying a model or looking for a data science team, no question is too stupid. Ask especially pointed questions within the context of your workflow, the culture of your operations, and the people it will impact.

Reach out if you have more questions or need help selecting a team or data science model, https://www.viralml.com/#contactme